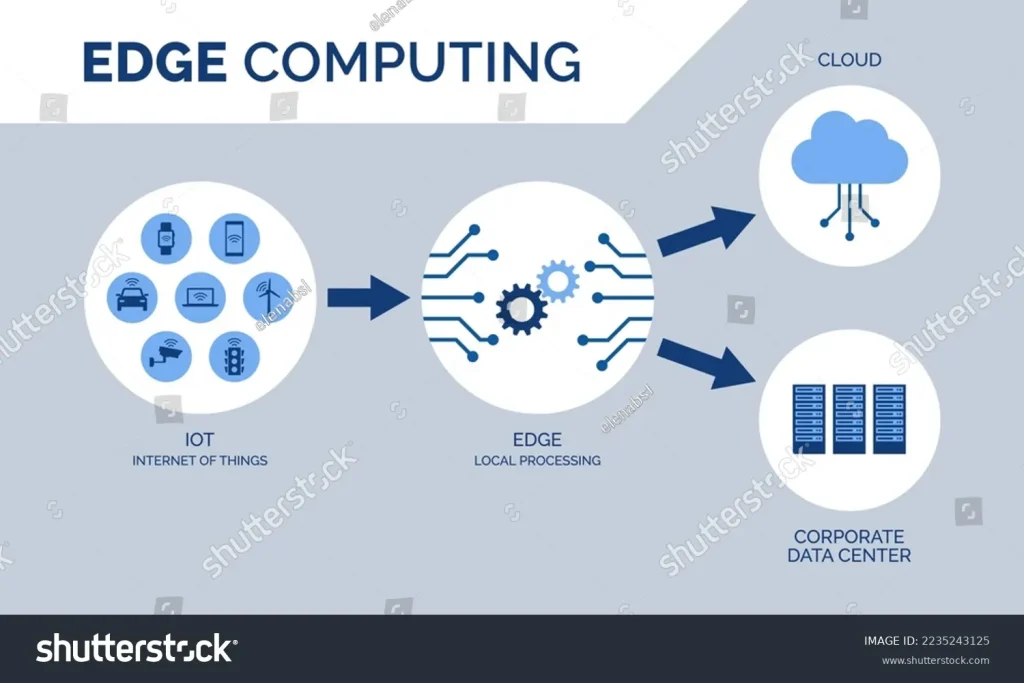

Edge Computing is redefining where data is processed, analyzed, and acted upon. By bringing computation closer to the data source, it enables near-instant insights for applications ranging from manufacturing control to smart city services. As devices proliferate, from industrial sensors to autonomous systems, organizations track edge computing trends to decide how much intelligence to place locally and how much to keep in centralized systems. Moving compute and storage toward the edge reduces latency, eases bandwidth pressure, and enables context-aware automation that can respond within milliseconds. Crucially, edge computing complements the cloud rather than replacing it. The two form a continuum in which edge nodes perform real-time tasks while the cloud handles heavy analytics, model training, and archiving. Embracing this distributed architecture requires governance, security, and interoperable platforms that preserve visibility across the entire edge-to-cloud ecosystem.

Viewed through a different lens, this approach is about distributed processing at the network edge, pushing analytics and inference toward gateways, sensors, and local devices. In practical terms, organizations deploy micro data centers and edge nodes that perform initial filtering, anomaly detection, and rapid decision-making without constantly routing data upstream. This near-data processing reduces backhaul traffic and supports privacy by keeping sensitive information closer to its source, while still enabling deeper cloud-level insights when needed. From an architectural perspective, terms such as near-edge, fog computing variants, and on-device AI reflect the same trend of localized computation designed for resilience. As a result, stakeholders should plan for interoperable platforms, robust security at the edge, and clear data governance to maximize value across distributed environments.
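
To make the filtering step concrete, here is a minimal Python sketch of an edge node that keeps a rolling window of sensor readings and forwards only statistical outliers upstream. The class name `EdgeAnomalyFilter`, the rolling z-score method, and the default window and threshold values are illustrative assumptions rather than a prescribed algorithm; production systems often use domain-specific rules or trained models instead.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Dict, List, Tuple


class EdgeAnomalyFilter:
    """Rolling z-score detector intended to run on an edge node (illustrative)."""

    def __init__(self, window: int = 20, z_threshold: float = 3.0, min_samples: int = 5):
        self.readings = deque(maxlen=window)  # bounded memory for constrained devices
        self.z_threshold = z_threshold
        self.min_samples = min_samples

    def process(self, value: float) -> bool:
        """Return True if `value` is anomalous relative to the recent window."""
        is_anomaly = False
        if len(self.readings) >= self.min_samples:
            mu = mean(self.readings)
            sigma = pstdev(self.readings)
            if sigma == 0:
                # Perfectly flat history: any change at all counts as anomalous.
                is_anomaly = value != mu
            else:
                is_anomaly = abs(value - mu) / sigma > self.z_threshold
        self.readings.append(value)
        return is_anomaly


def filter_stream(values: List[float], detector: EdgeAnomalyFilter) -> Tuple[List[float], Dict[str, int]]:
    """Split a batch into readings to forward upstream and a small local summary."""
    forwarded = [v for v in values if detector.process(v)]
    summary = {"count": len(values), "forwarded": len(forwarded)}
    return forwarded, summary
```

Feeding it `[20.0, 20.1, 19.9, 20.0, 20.2, 20.1, 35.0, 20.0]` forwards only the `35.0` spike, so one reading out of eight crosses the backhaul link while the rest stay on the node.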

Edge Computing: Accelerating Real-Time Data Processing at the Edge and Complementing the Cloud

Edge Computing is transforming how data is handled by moving compute and storage closer to the data source, enabling real-time data processing at the edge and near-immediate insights. As IoT edge computing expands across industrial sensors, autonomous vehicles, and smart devices, processing data locally lowers latency and reduces the bandwidth spent shipping raw data upstream. This aligns with edge computing trends that emphasize local processing while still leveraging the cloud in a cloud-to-edge continuum.

By distributing workloads across edge nodes, micro data centers, and gateways, organizations can blend on-device inference and local analytics with centralized capabilities. This supports a hybrid edge-and-cloud architecture in which each task is placed according to its latency sensitivity, data locality, and regulatory constraints. Technologies such as containerization, lightweight AI runtimes, multi-access edge computing (MEC), and the ongoing rollout of 5G and Wi‑Fi 6/7 enable scalable edge deployments without sacrificing security, privacy, or governance.
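
The placement logic can be sketched as a small rule function. Everything here is hypothetical: the `Workload` fields, the 80 ms cloud round-trip figure, and the rule ordering are assumptions chosen for illustration. A real scheduler would use measured latencies and organization-specific policy.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    latency_budget_ms: float    # end-to-end deadline the task must meet
    data_must_stay_local: bool  # regulatory or data-sovereignty constraint
    needs_heavy_compute: bool   # e.g. model training or large batch analytics


# Hypothetical round-trip time to the cloud region; measure this in a real deployment.
CLOUD_RTT_MS = 80.0


def place(workload: Workload, cloud_rtt_ms: float = CLOUD_RTT_MS) -> str:
    """Return "edge" or "cloud" using simple, illustrative rules."""
    if workload.data_must_stay_local:
        return "edge"   # regulatory constraints override everything else
    if workload.latency_budget_ms < cloud_rtt_ms:
        return "edge"   # the cloud round trip alone would miss the deadline
    if workload.needs_heavy_compute:
        return "cloud"  # centralized capacity for training and deep analytics
    return "edge"       # default to local processing to save backhaul bandwidth
```

Checking the regulatory rule first reflects the idea that compliance is a hard constraint, while latency and compute are trade-offs.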

Edge Computing Security and Governance for IoT Edge Computing and Industry 4.0

Security remains a central concern in Edge Computing. The distributed nature of edge nodes expands the attack surface across devices, gateways, and networks, so a defense-in-depth approach is essential. Device identity, mutual authentication, secure boot, encrypted communications, and zero-trust principles help protect real-time processing at the edge and the integrity of edge analytics. Keeping sensitive information closer to the source can strengthen data sovereignty and privacy, provided it is backed by robust encryption, tamper detection, and secure update mechanisms that prevent configuration drift or unauthorized modification.
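
As a minimal sketch of tamper detection on edge telemetry, the Python below signs each message with HMAC-SHA256 using a per-device key and rejects stale messages as a coarse replay check. It assumes the key has already been provisioned securely (for example, in a hardware secure element); the function names and the 30-second freshness window are hypothetical. It is not a substitute for mutual TLS with device certificates, which is the more common way to implement the mutual authentication described above.

```python
import hashlib
import hmac
import json
import time
from typing import Optional


def _canonical(body: dict) -> bytes:
    # Canonical JSON so sender and receiver hash identical bytes.
    return json.dumps(body, sort_keys=True, separators=(",", ":")).encode()


def sign_message(device_key: bytes, device_id: str, payload: dict, now: Optional[float] = None) -> dict:
    """Wrap a payload with device identity, a timestamp, and an HMAC-SHA256 tag."""
    body = {
        "device_id": device_id,
        "ts": time.time() if now is None else now,
        "payload": payload,
    }
    body["tag"] = hmac.new(device_key, _canonical(body), hashlib.sha256).hexdigest()
    return body


def verify_message(device_key: bytes, message: dict, max_age_s: float = 30.0,
                   now: Optional[float] = None) -> bool:
    """Reject messages whose tag does not match or whose timestamp is stale."""
    tag = message.get("tag")
    if not isinstance(tag, str):
        return False
    body = {k: v for k, v in message.items() if k != "tag"}
    expected = hmac.new(device_key, _canonical(body), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, tag):  # constant-time comparison
        return False  # payload or identity was altered, or the key is wrong
    current = time.time() if now is None else now
    return abs(current - body["ts"]) <= max_age_s  # coarse replay protection
```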

From an operational perspective, orchestration and observability are critical for scalable edge deployments. Lightweight container runtimes, edge-specific orchestration layers, and strong data governance support secure management of distributed resources. Adopting interoperable platforms and open standards reduces vendor lock-in and makes it easier to tune latency and bandwidth and to meet regulatory requirements across the IoT edge computing landscape.
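
At its simplest, observability means knowing which edge nodes have stopped reporting. The sketch below tracks the latest heartbeat per node and lists those that have gone silent. The `FleetMonitor` name and the 60-second staleness threshold are assumptions; real deployments would usually export such signals to an existing monitoring stack rather than hand-rolling one.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class FleetMonitor:
    """Track the last heartbeat from each edge node and report stale ones."""
    stale_after_s: float = 60.0
    last_seen: Dict[str, float] = field(default_factory=dict)

    def heartbeat(self, node_id: str, timestamp: float) -> None:
        # Keep only the newest heartbeat; late, out-of-order arrivals are ignored.
        if timestamp > self.last_seen.get(node_id, float("-inf")):
            self.last_seen[node_id] = timestamp

    def stale_nodes(self, now: float) -> List[str]:
        """Nodes that have not reported within `stale_after_s` seconds, sorted by ID."""
        return sorted(
            node for node, ts in self.last_seen.items()
            if now - ts > self.stale_after_s
        )
```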

Frequently Asked Questions

How do edge computing trends influence decisions about processing data at the edge versus the cloud?

Edge computing trends point to a cloud-to-edge continuum in which workloads move closer to data sources to reduce latency and save bandwidth. For real-time data processing at the edge, inference and lightweight analytics run on edge nodes such as gateways or micro data centers, delivering near-instant insights even when connectivity is intermittent. The cloud remains essential for heavy compute, long-term storage, and centralized coordination, so a hybrid approach often yields the best balance of latency, governance, and cost.
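
Operating through intermittent connectivity usually relies on a store-and-forward pattern: the edge node keeps acting locally and queues results until the uplink returns. A minimal sketch follows, assuming a caller-supplied `send` function that returns `True` when the cloud accepts a record; the class name and the drop-oldest policy for a full buffer are illustrative choices.

```python
from collections import deque
from typing import Callable, Deque


class StoreAndForward:
    """Buffer records locally while the uplink is down and flush when it returns.

    Local decisions are unaffected; only the upload to the cloud is deferred.
    """

    def __init__(self, send: Callable[[dict], bool], capacity: int = 1000):
        self.send = send  # assumed to return True if the cloud accepted the record
        self.buffer: Deque[dict] = deque(maxlen=capacity)  # oldest dropped when full

    def submit(self, record: dict) -> None:
        self.buffer.append(record)
        self.flush()

    def flush(self) -> int:
        """Send buffered records in order, stopping at the first failure."""
        sent = 0
        while self.buffer:
            if not self.send(self.buffer[0]):
                break  # uplink unavailable: keep the record and retry later
            self.buffer.popleft()
            sent += 1
        return sent
```

Dropping the oldest records when the buffer fills bounds memory on constrained hardware; if every record must survive an outage, a disk-backed queue would be the safer choice.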

What is the difference between edge and cloud computing in the context of IoT edge computing, and how does real-time data processing at the edge enable smarter devices?

The distinction between edge and cloud computing is about where workloads execute: the edge handles low-latency inference and local decision making, while the cloud handles heavy analytics and large-scale model training. In IoT edge computing, sensors and devices feed nearby edge nodes that perform initial data fusion, anomaly detection, and safety checks close to the source. Real-time data processing at the edge enables immediate action, reduces bandwidth use, and improves resilience when connectivity is limited, while cloud resources support model updates and centralized management.
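
Initial data fusion and safety checks near the source can be as simple as combining redundant sensors and acting on the result locally. This sketch assumes three redundant temperature sensors and a hypothetical shutdown limit. A median fuses the readings so that a single faulty sensor out of three cannot by itself trigger or mask a shutdown, and missing data puts the device into a fail-safe state.

```python
from statistics import median
from typing import Optional, Sequence


def fuse_redundant(readings: Sequence[Optional[float]]) -> Optional[float]:
    """Fuse redundant sensor readings with a median, ignoring missing values."""
    valid = [r for r in readings if r is not None]
    return median(valid) if valid else None


def safety_action(fused: Optional[float], limit: float) -> str:
    """Decide locally, without waiting for a round trip to the cloud."""
    if fused is None:
        return "fail-safe"  # no trustworthy data: assume the worst
    return "shutdown" if fused > limit else "ok"
```

For example, readings of `70.0`, `71.0`, and a faulty `500.0` fuse to `71.0`, so a 90-degree limit is not tripped by the broken sensor.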

| Key Point | Description |
|---|---|
| Definition | Edge Computing moves compute and storage closer to data sources to reduce latency and optimize bandwidth, complementing the cloud. |
| Transformative impact | Enables near-instant insights and real-time decision making by processing data at or near sensors, gateways, or devices, even with intermittent connectivity. |
| Core components | Edge nodes, micro data centers, and edge gateways; containerization, orchestration, and lightweight AI runtimes. |
| Relation to cloud | Not a replacement but a cloud-to-edge continuum, with a division of labor that optimizes latency, security, and cost. |
| Key benefits | Lower latency, bandwidth savings, data sovereignty, privacy, and edge AI for on-device decision making. |
| Real-world use cases | Manufacturing, logistics, smart cities, and healthcare: real-time monitoring, anomaly detection, and safety checks. |
| Trends & architecture | 5G and MEC, the cloud-to-edge continuum, hybrid topologies, AI at the edge, and specialized hardware (edge GPUs, NPUs, FPGAs). |
| Security & governance | Defense-in-depth, mutual authentication, encryption, zero-trust, and data governance across jurisdictions. |
| Challenges | Distributed orchestration, scalable deployment, observability, data governance, and avoiding vendor lock-in. |
| Guidance for architects | Start with a small use case, ensure data locality, build in security by design, and choose interoperable platforms and scalable edge infrastructure. |

Summary

Edge Computing is a practical and strategic response to the latency, bandwidth, and data-locality challenges of modern systems. It enables real-time data processing at the edge, supports IoT-driven innovation, and complements cloud computing to deliver faster, more reliable, and more secure services. As organizations continue to deploy edge nodes across factories, campuses, and cities, the value of a well-planned edge strategy becomes increasingly clear. By understanding edge computing trends, recognizing when to push workloads to the edge rather than the cloud, and investing in secure, interoperable infrastructure, businesses can unlock new capabilities and stay ahead in a fast-evolving technology landscape.